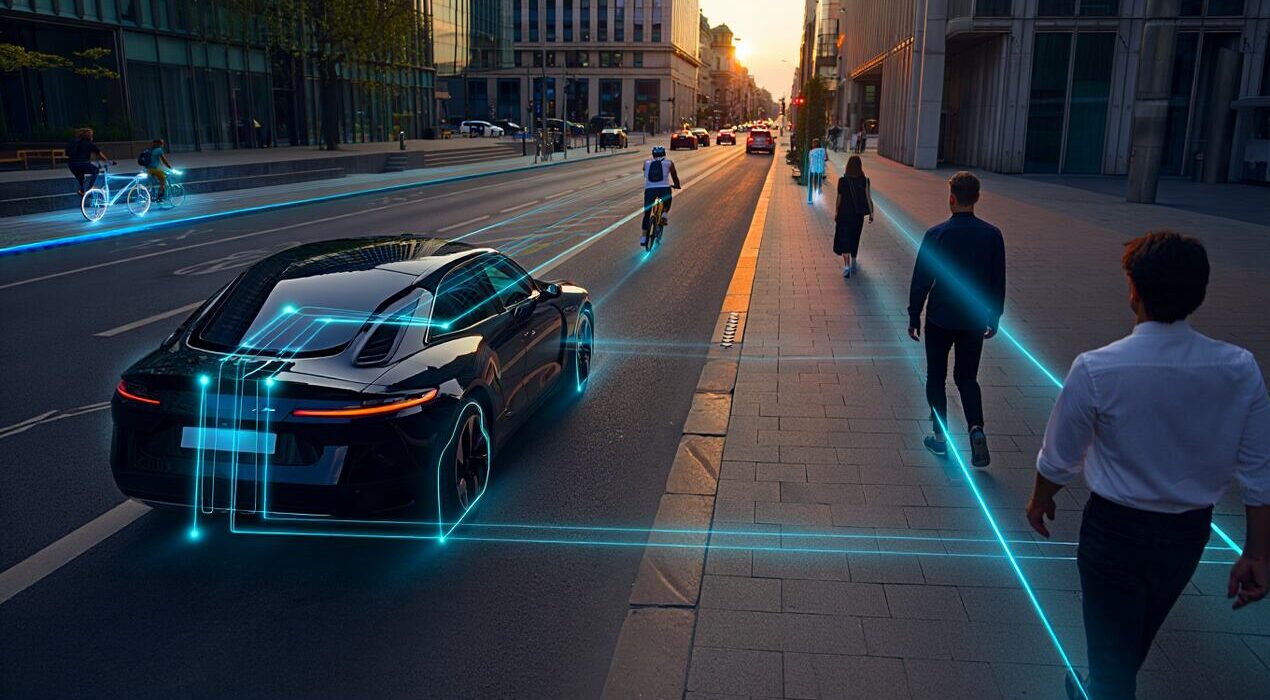

New AI Model Predicts Human Actions, Making Self-Driving Cars Smarter

A new AI model can predict what people may do next. Rather than just reacting to movement, it uses visual and contextual clues. Researchers from Texas A&M University and KAIST developed the system and named it OmniPredict. Its main goal is safer autonomous driving. OmniPredict is built on a Multimodal Large Language Model, the same kind of technology that powers advanced chatbots, but here it is used to predict human actions. The system combines images with context: for example, it reads posture, gaze, and surroundings. As a result, it forecasts likely behavior in real time. Early tests show strong performance. Notably, the model works well without specialized training.

Smarter Streets and Safer Cars

Traditional self-driving systems only react. OmniPredict adds human-like street awareness. Therefore, vehicles can plan ahead instead of braking late.

This approach could reduce close calls. In addition, traffic flow may feel smoother. Pedestrians could also feel safer at crossings.

Researchers believe this shift matters. Fewer surprises mean fewer accidents. As a result, cities may become easier to navigate.

OmniPredict has wider potential. It could help in emergency or military settings. For example, it may flag signs of stress or danger early.

The AI can read hesitation and body orientation. Therefore, it supports faster decisions in complex environments. Importantly, it aims to assist, not replace, people.

Tested Under Real Pressure

The team tested OmniPredict on tough datasets. These included hidden pedestrians and unusual behavior. The model still performed well.

It reached 67% accuracy. That score beats similar systems by 10%. In addition, it responded faster and adapted better.

A More Intuitive AI Future

OmniPredict remains a research model. However, it points to a smarter future. AI systems may soon understand intent, not just motion.

When machines grasp human behavior, safety improves. As a result, the road ahead looks more intuitive for everyone.